Druid

http://druid.io

Druid (originally developed at Metamarkets and later widely used at Yahoo!) is a column-oriented, open-source, distributed data store designed to ingest massive quantities of event data quickly and make that data available to queries immediately, i.e. real-time analytics.

Fully deployed, Druid runs as a cluster of specialized nodes that supports a fault-tolerant architecture: data is stored redundantly, and there are multiple members of each node type. In addition, the cluster relies on external dependencies for coordination (Apache ZooKeeper), metadata storage (MySQL), and deep storage (e.g., HDFS, Amazon S3, or Apache Cassandra).
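To make the three external dependencies concrete, here is a minimal Python sketch that renders the shared properties pointing a Druid cluster at ZooKeeper, MySQL, and HDFS. The property keys and extension names follow the Druid configuration reference as we understand it and should be checked against your Druid version; the host names, JDBC URI, and segment directory are hypothetical placeholders, and other required setup (credentials, the MySQL JDBC driver, per-node settings) is omitted.

```python
import json


def render_common_properties(zk_hosts, mysql_uri, hdfs_dir):
    """Render a partial common.runtime.properties for Druid's external dependencies.

    A sketch only: the values passed in are hypothetical, and a real
    deployment needs further settings (credentials, per-node config).
    """
    props = {
        # MySQL metadata storage and HDFS deep storage ship as extensions.
        "druid.extensions.loadList": json.dumps(
            ["mysql-metadata-storage", "druid-hdfs-storage"]
        ),
        # Coordination: comma-separated ZooKeeper ensemble.
        "druid.zk.service.host": ",".join(zk_hosts),
        # Metadata storage: MySQL, reached over JDBC.
        "druid.metadata.storage.type": "mysql",
        "druid.metadata.storage.connector.connectURI": mysql_uri,
        # Deep storage: segments persisted to HDFS.
        "druid.storage.type": "hdfs",
        "druid.storage.storageDirectory": hdfs_dir,
    }
    # One key=value pair per line, as in a Java .properties file.
    return "".join(f"{key}={value}\n" for key, value in props.items())


if __name__ == "__main__":
    print(render_common_properties(
        ["zk1.example.com:2181", "zk2.example.com:2181"],
        "jdbc:mysql://metadata.example.com:3306/druid",
        "/druid/segments",
    ))
```

Swapping deep storage to Amazon S3 would change `druid.storage.type` and load a different extension; the node processes themselves stay the same.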

Druid's native query language is JSON over HTTP, although the community has contributed query libraries in numerous languages, including SQL.
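A native Druid query is just a JSON document sent in an HTTP POST. The sketch below builds a `timeseries` query that counts rows per day and posts it with the standard library. It assumes a Broker listening on `localhost:8082` with the native query endpoint at `/druid/v2/`, and a datasource named `wikipedia`; both are illustrative assumptions rather than values from these notes.

```python
import json
import urllib.request


def build_timeseries_query(datasource, interval):
    """Build a native Druid 'timeseries' query that counts rows per day."""
    return {
        "queryType": "timeseries",
        "dataSource": datasource,
        "granularity": "day",
        "intervals": [interval],  # ISO-8601 "start/end" interval
        "aggregations": [{"type": "count", "name": "rows"}],
    }


def post_query(query, url="http://localhost:8082/druid/v2/"):
    """Send the query as a JSON body in an HTTP POST and decode the JSON reply."""
    req = urllib.request.Request(
        url,
        data=json.dumps(query).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read().decode("utf-8"))


if __name__ == "__main__":
    # Print the request body only; call post_query() against a live Broker.
    query = build_timeseries_query("wikipedia", "2016-06-27/2016-06-28")
    print(json.dumps(query, indent=2))
```

Note that the `count` aggregator counts rows as stored in Druid; if ingestion rolled events up, that is not the same as the number of raw events.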

Less expressive than an RDBMS with SQL: it doesn't support joins or exact distinct counts (its design was inspired by Google Dremel). But it is very quick to answer predefined questions.

Presto

https://prestodb.io

Presto (from Facebook) is an open source distributed SQL query engine for running interactive analytic queries against data sources of all sizes ranging from gigabytes to petabytes.

Presto was designed and written from the ground up for interactive analytics and approaches the speed of commercial data warehouses while scaling to the size of organizations like Facebook.

Presto allows querying data where it lives, including Hive, Cassandra, relational databases or even proprietary data stores. A single Presto query can combine data from multiple sources, allowing for analytics across your entire organization.
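In Presto every table is addressed as `catalog.schema.table`, and each catalog is backed by a connector, so one statement can join across sources. The sketch below builds such a federated query and submits it through Presto's HTTP client protocol (POST to `/v1/statement`, then follow `nextUri` until no more is returned). The catalogs `hive` and `mysql`, the tables and columns, the coordinator address `localhost:8080`, and the user name are all hypothetical assumptions.

```python
import json
import urllib.request


def qualified(catalog, schema, table):
    """Presto names a table catalog.schema.table; the catalog picks the connector."""
    return f"{catalog}.{schema}.{table}"


def federated_sql():
    # Hypothetical tables: page views in a Hive catalog, user profiles in MySQL.
    views = qualified("hive", "web", "page_views")
    users = qualified("mysql", "crm", "users")
    return (
        "SELECT u.country, count(*) AS page_views "
        f"FROM {views} AS v JOIN {users} AS u ON v.user_id = u.id "
        "GROUP BY u.country ORDER BY page_views DESC"
    )


def run_presto_query(sql, server="http://localhost:8080", user="analyst"):
    """Submit SQL to the statement endpoint and follow nextUri, collecting rows."""
    headers = {"X-Presto-User": user}
    req = urllib.request.Request(server + "/v1/statement", data=sql.encode("utf-8"),
                                 headers=headers, method="POST")
    rows = []
    while req is not None:
        with urllib.request.urlopen(req) as resp:
            payload = json.loads(resp.read().decode("utf-8"))
        if "error" in payload:
            raise RuntimeError(payload["error"].get("message", "query failed"))
        rows.extend(payload.get("data", []))
        next_uri = payload.get("nextUri")
        req = urllib.request.Request(next_uri, headers=headers) if next_uri else None
    return rows


if __name__ == "__main__":
    print(federated_sql())
```

In practice a client library would normally wrap this protocol; the raw loop is shown only to make the request flow visible.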

Samza

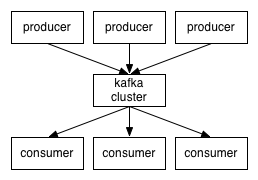

Apache Samza is a distributed stream processing framework. It uses Apache Kafka for messaging and Apache Hadoop YARN to provide fault tolerance, processor isolation, security, and resource management.

Drill

https://drill.apache.org

Schema-free SQL Query Engine for Hadoop, NoSQL and Cloud Storage.

Drill is designed from the ground up to support high-performance analysis on the semi-structured and rapidly evolving data (including JSON) coming from modern Big Data applications, while still providing the familiarity and ecosystem of ANSI SQL.

Drill runs dynamic queries on self-describing data in files (such as JSON, Parquet, and text) and in HBase tables, without requiring metadata definitions in the Hive metastore.
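Because the data describes itself, a raw JSON file can be queried directly, with nested fields reached through dot notation on a table alias. The sketch below builds such a query and posts it to Drill's REST endpoint. It assumes a Drillbit on `localhost:8047`, the default `dfs` file-system storage plugin, and a hypothetical file `/data/events.json` whose records carry `event_type` and a nested `device.os` field.

```python
import json
import urllib.request


def drill_json_query(path):
    """Build a Drill REST request body that reads a raw JSON file with no schema."""
    # No table definition exists: Drill discovers fields while reading the file.
    # The nested field device.os is reached through the table alias t.
    sql = f"SELECT t.event_type, t.device.os AS os FROM dfs.`{path}` t LIMIT 10"
    return {"queryType": "SQL", "query": sql}


def submit(body, server="http://localhost:8047"):
    """POST the body to Drill's /query.json endpoint and return the result rows."""
    req = urllib.request.Request(
        server + "/query.json",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read().decode("utf-8")).get("rows", [])


if __name__ == "__main__":
    print(drill_json_query("/data/events.json")["query"])
```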

Drill supports a variety of NoSQL databases and file systems, including HBase, MongoDB, MapR-DB, HDFS, MapR-FS, Amazon S3, Azure Blob Storage, Google Cloud Storage, Swift, NAS and local files. A single query can join data from multiple datastores. For example, you can join a user profile collection in MongoDB with a directory of event logs in Hadoop.
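The MongoDB-plus-Hadoop join described above could look roughly like the query built below. Drill addresses each source through a storage plugin prefix, and querying a directory treats all the files under it as one table. The plugin names `mongo` and `hdfs`, the database, collection, directory, and the `user_id`/`name` fields are hypothetical assumptions; the resulting SQL would be submitted like any other Drill query.

```python
def cross_store_join_sql(mongo_db, collection, log_dir):
    """Build a Drill SQL join between a MongoDB collection and a directory of logs."""
    # Hypothetical plugins: "mongo" for MongoDB, "hdfs" for a file-system plugin
    # configured against HDFS. The directory is read as a single table.
    return (
        "SELECT u.name, COUNT(*) AS events "
        f"FROM mongo.{mongo_db}.{collection} u "
        f"JOIN hdfs.`{log_dir}` e ON u.user_id = e.user_id "
        "GROUP BY u.name"
    )


if __name__ == "__main__":
    print(cross_store_join_sql("crm", "users", "/logs/events"))
```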