Hadoop 2.0

6/8/14 - Hadoop Maturity Summit:

http://gigaom.com/2014/06/08/hadoop-maturity-summits/

YARN

Part of Hadoop 2.0, YARN (Yet Another Resource Negotiator) is a resource manager that enables non-MapReduce jobs to run on Hadoop and leverage HDFS. YARN provides a generic resource management framework for implementing distributed applications.

MapReduce supports only batch processing; by letting other engines run on the same cluster, YARN opens Hadoop to real-time data processing.
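To make the idea of a generic resource manager concrete, here is a minimal sketch in plain Python. It is not the YARN API: `Node`, `ToyResourceManager`, and `request_container` are hypothetical names, and the first-fit placement is an assumption chosen for brevity. The point is only that the manager hands out containers without caring whether a batch or a streaming job runs inside them.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """A worker machine with a fixed amount of free memory (hypothetical model)."""
    name: str
    free_mb: int


@dataclass
class ToyResourceManager:
    """Grants containers to any kind of application; not the real YARN API."""
    nodes: list
    grants: list = field(default_factory=list)

    def request_container(self, app: str, memory_mb: int):
        # First-fit placement (an assumption): take the first node with room.
        for node in self.nodes:
            if node.free_mb >= memory_mb:
                node.free_mb -= memory_mb
                self.grants.append((app, node.name, memory_mb))
                return node.name
        return None  # cluster is full; a real scheduler would queue the request


rm = ToyResourceManager(nodes=[Node("node-1", 4096), Node("node-2", 2048)])
# A batch job and a streaming job share the same cluster.
batch_node = rm.request_container("mapreduce-wordcount", 3072)
stream_node = rm.request_container("streaming-job", 2048)
rejected = rm.request_container("another-job", 4096)
```

Here the batch job lands on `node-1`, the streaming job on `node-2`, and the third request is refused because no node has 4096 MB left.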

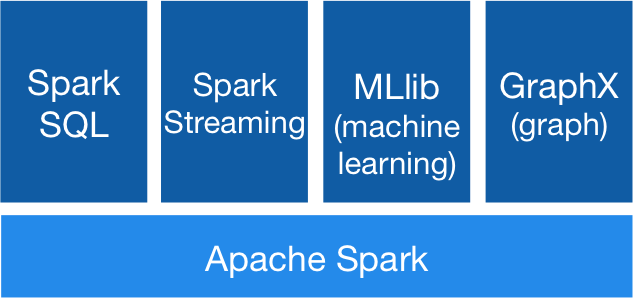

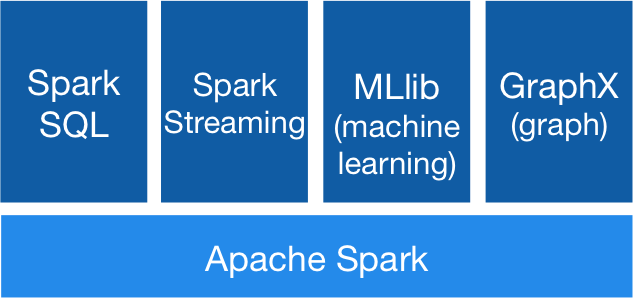

Apache Spark is a fast and general engine for large-scale data processing. It offers high-level APIs in Java, Scala and Python as well as a rich set of libraries including stream processing, machine learning, and graph analytics.

Spark powers a stack of high-level tools, including:

- Shark for SQL

- MLlib for machine learning

- GraphX for graph analytics

- Spark Streaming for stream processing

MLlib

MLlib is Spark's scalable machine learning library. It fits into Spark's APIs and interoperates with NumPy in Python. It can leverage any Hadoop data source (HDFS, HBase or local files).

Machine Learning Library (MLlib) guide: http://spark.apache.org/docs/latest/mllib-guide.html

Cassandra is a massively scalable open source NoSQL database. It is designed for managing large amounts of structured, semi-structured, and unstructured data across multiple data centers and the cloud. Cassandra delivers continuous availability, linear scalability, and operational simplicity across many commodity servers with no single point of failure, along with a dynamic data model designed for flexibility and fast response times.

Cassandra has a “masterless” architecture, meaning all nodes play the same role.

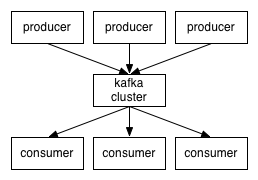
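One way to see how a cluster can work without a master is to place nodes on a hash ring, so any node can compute which peer owns a given key. The sketch below is a simplified illustration, not Cassandra's implementation: `ToyRing`, `token`, and the node names are hypothetical, and SHA-256 stands in for whatever partitioner a real cluster is configured with.

```python
import bisect
import hashlib


def token(value: str) -> int:
    """Map a string to a position on the ring (SHA-256 is a stand-in hash)."""
    return int(hashlib.sha256(value.encode("utf-8")).hexdigest(), 16)


class ToyRing:
    def __init__(self, node_names):
        # Every node gets a token the same way; no node is special.
        self.ring = sorted((token(name), name) for name in node_names)
        self.tokens = [t for t, _ in self.ring]

    def owner(self, row_key: str) -> str:
        # The first node clockwise from the key's token owns the key;
        # the modulo wraps around past the highest token.
        index = bisect.bisect_right(self.tokens, token(row_key)) % len(self.ring)
        return self.ring[index][1]


ring = ToyRing(["node-a", "node-b", "node-c"])
owner = ring.owner("user:42")
```

Because ownership depends only on the hash, every node that knows the ring membership reaches the same answer without asking a coordinator.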

Kafka is a distributed pub/sub and message queuing system.

Kafka organizes message streams into topics, which are partitioned and replicated across multiple machines called brokers.

A single Kafka broker can handle hundreds of megabytes of reads and writes per second from thousands of clients. Kafka is designed to allow a single cluster to serve as the central data backbone for a large organization. It can be elastically and transparently expanded without downtime. Data streams are partitioned and spread over a cluster of machines to allow data streams larger than the capability of any single machine and to allow clusters of co-ordinated consumers. Kafka has a modern cluster-centric design that offers strong durability and fault-tolerance guarantees. Messages are persisted on disk and replicated within the cluster to prevent data loss. Each broker can handle terabytes of messages without performance impact.

Basic messaging terminology:

- Kafka maintains feeds of messages in categories called topics.

- A process that publishes messages to a Kafka topic is called a producer.

- A process that subscribes to topics and processes the feed of published messages is called a consumer.

- Kafka runs as a cluster of one or more servers, each of which is called a broker.
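The terminology above can be sketched with a small in-memory model. This is not the Kafka client API: `ToyBroker`, `publish`, and `fetch` are hypothetical names, and using CRC32 of the key to choose a partition is an assumption made for illustration.

```python
import zlib
from collections import defaultdict


class ToyBroker:
    """In-memory stand-in for a broker: topic -> partition -> list of messages."""

    def __init__(self, partitions_per_topic: int = 3):
        self.partitions_per_topic = partitions_per_topic
        self.topics = defaultdict(
            lambda: [[] for _ in range(partitions_per_topic)]
        )

    def publish(self, topic: str, key: str, value: str) -> int:
        # Messages with the same key always land in the same partition.
        partition = zlib.crc32(key.encode("utf-8")) % self.partitions_per_topic
        self.topics[topic][partition].append(value)
        return partition

    def fetch(self, topic: str, partition: int, offset: int):
        # The consumer tracks its own offset and reads from there onward.
        return self.topics[topic][partition][offset:]


broker = ToyBroker()
# Producer side: publish two events for the same key.
p1 = broker.publish("page-views", key="user-1", value="/home")
p2 = broker.publish("page-views", key="user-1", value="/cart")
# Consumer side: read the partition from offset 0, then from offset 1.
all_messages = broker.fetch("page-views", p1, offset=0)
new_messages = broker.fetch("page-views", p1, offset=1)
```

Both messages share a partition, so the consumer sees them in publish order; reading from offset 1 returns only the second message.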

Storm

http://vimeo.com/40972420

HBase

http://hbase.apache.org/book.html#other.info.videos

Use Apache HBase™ when you need random, realtime read/write access to your Big Data. This project's goal is the hosting of very large tables -- billions of rows X millions of columns -- atop clusters of commodity hardware. Apache HBase is an open-source, distributed, versioned, non-relational database modeled after Google's Bigtable. Apache HBase provides Bigtable-like capabilities on top of Hadoop and HDFS.

HDFS is a distributed file system that is well suited for the storage of large files. Its documentation states that it is not, however, a general purpose file system, and does not provide fast individual record lookups in files. HBase, on the other hand, is built on top of HDFS and provides fast record lookups (and updates) for large tables.
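The kind of access HBase adds on top of HDFS can be pictured as a sorted, versioned map: row key, then column, then timestamped values. The sketch below is a toy model in plain Python, not the HBase client API; `ToyTable` and its methods are hypothetical, and real HBase adds column families, regions, and on-disk storage that are left out here.

```python
import time
from collections import defaultdict
from typing import Optional


class ToyTable:
    """row key -> column ("family:qualifier") -> list of (timestamp, value)."""

    def __init__(self):
        self.rows = defaultdict(lambda: defaultdict(list))

    def put(self, row: str, column: str, value: str,
            timestamp: Optional[int] = None) -> None:
        # Default to the current time in milliseconds when no timestamp is given.
        ts = timestamp if timestamp is not None else int(time.time() * 1000)
        self.rows[row][column].append((ts, value))

    def get(self, row: str, column: str):
        # Return the value with the newest timestamp, or None if absent.
        versions = self.rows.get(row, {}).get(column, [])
        return max(versions, key=lambda v: v[0])[1] if versions else None

    def scan(self, start_row: str, stop_row: str):
        # Visit row keys in sorted order; stop_row is exclusive.
        return [r for r in sorted(self.rows) if start_row <= r < stop_row]


table = ToyTable()
table.put("user#001", "info:email", "old@example.com", timestamp=1)
table.put("user#001", "info:email", "new@example.com", timestamp=2)
table.put("user#002", "info:email", "b@example.com", timestamp=1)
latest = table.get("user#001", "info:email")
keys = table.scan("user#001", "user#002")
```

A lookup by row key returns the newest version of a cell, which is the record-level access that a plain file in HDFS does not offer.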

Both Cassandra and HBase are NoSQL databases, a term for which you can find numerous definitions. Generally, it means you cannot manipulate the database with SQL. However, Cassandra has implemented CQL (Cassandra Query Language), whose syntax is closely modeled after SQL. Cassandra uses the Gossip protocol for internode communications, and Gossip services are integrated with the Cassandra software. HBase relies on ZooKeeper (an entirely separate distributed application) to handle corresponding tasks.
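As a rough illustration of how gossip spreads cluster state without a central coordinator, the simulation below has each node exchange its view with one random peer per round. It is a simplified sketch, not Cassandra's actual protocol: `gossip_round`, the `(version, value)` state layout, the node names, and the fixed random seed are all assumptions.

```python
import random


def gossip_round(states, rng):
    """Each node merges state with one random peer; the higher version wins."""
    names = list(states)
    for name in names:
        peer = rng.choice([n for n in names if n != name])
        merged = dict(states[name])
        for key, (version, value) in states[peer].items():
            if key not in merged or merged[key][0] < version:
                merged[key] = (version, value)
        # Both sides leave the exchange with the same merged view.
        states[name] = merged
        states[peer] = dict(merged)


rng = random.Random(0)  # fixed seed so the run is repeatable
states = {f"node-{i}": {} for i in range(8)}
states["node-0"]["node-0"] = (1, "UP")  # at first only node-0 knows its status

rounds = 0
# Cap the loop at 100 rounds so the sketch always terminates.
while rounds < 100 and any("node-0" not in view for view in states.values()):
    gossip_round(states, rng)
    rounds += 1
```

After the loop, every node's view contains the entry that started on `node-0`, even though no node ever contacted a central service.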